LOLMIL: Living Off the Land Models and Inference Libraries


Kuang Grade Mark Eleven was a superintelligent malware capable of learning from its environment, merging with its surroundings, and breaking the toughest ICE. Case deploys it, and six hours later he's in Tessier-Ashpool. He rides it to Wintermute. C2-less malware has been a dream of attackers since at least 1984, when William Gibson penned Neuromancer. In his work we see the idea of malware with no explicit instructions, where the only requirements are setup and a goal.

If an adversary tried to make Kuang Eleven today, what would it look like? The malware needs to have an objective and the ability to independently "decide" what actions to take based on the environment until it reaches the goal. For a long time, it seemed as though the best way to build this would be a large data processing platform that could collect operation data and, based on relationships in that data, recommend or execute exploitation steps. Now we have LLMs. A well-designed LLM system can be an incredible data processing engine and actor. Many research labs focused on offensive security have realized this, including and especially Dreadnode, and are working to bring this vision to life. But how far can we push this concept? Can we eliminate the C2 server entirely and create truly autonomous malware?

PromptLock

I was made aware of a malware sample dubbed "PromptLock" when it took over my X feed after ESET exposed it. I tactically acquired a variant on VirusTotal and investigated how it worked. The malware was interesting because it reached out to an LLM provider to develop code for ransomware without human involvement: it was C2-less malware. It later came out that this malware was part of an NYU research paper (Ransomware 3.0: Self-Composing and LLM-Orchestrated) by Raz et al. and not malware deployed in a victim environment. The paper explains how PromptLock works and includes the prompts the researchers used. For a deeper understanding of the malware, I'd highly recommend finding yourself a copy (24BF7B72F54AA5B93C6681B4F69E579A47D7C102) and reverse engineering it.

At its core, the malware is simple: It connects to an Ollama instance hosting an open source model, generates (then validates) Lua for a ransomware campaign, and finally executes the generated code, all with no human involvement. I also observed that the prompts are embedded directly in the binary. However, one thing about the implementation bothered me: It reached out to their Ollama instance (172.42.0.253:8443) to generate the code. As someone with a background in offensive security, I couldn't help but think this looks like C2.

In the example of PromptLock, an agent reaches out to an external server to receive instructions and utilize the processing power available on the C2 server. The similarities to C2 are clear:

- The network traffic beacons at an interval to get more instructions

- The endpoint is a bare IP, but an attacker could hijack trust for the network communications (like domain fronting) by using a real model provider like OpenAI or Anthropic

- Attackers use the increased processing power on the C2 server to run things like Aggressor scripts. In this case, it's GPU power for running large models (though the authors used an open source model, for good reasons they discuss in the paper's “Ethical Considerations” section)

Pushing the Limit

This raises the question: can we push this tradecraft further? Instead of beaconing, which resembles C2 communication if you squint, can we "live off the land"? In other words, does the victim computer have an LLM, and can an attacker make the victim computer run inference on it?

Microsoft released Copilot+ PCs, which have a Neural Processing Unit (NPU) and ship with Phi Silica, a model from the Phi-3 family. To run inference and make developing an inference library simple, Microsoft has also shipped ONNX Runtime with Windows 10 version 1809 and later builds. So, with Copilot+ PCs, it's entirely possible to live off the land! The model ships with the computer, and there's no need to embed or statically link an inference library. The DLL shipped with 1809 lacks the out-of-the-box generative AI primitives that onnxruntime-genai provides, so hypothetical malware would need to implement that component itself. Given the trajectory of AI advancement, I predict Windows will ship onnxruntime-genai in the not-too-distant future, which would make things much easier for malware developers. Until then, we have Phi-3 and -4. Although local models aren't necessarily as good as their hosted counterparts from big labs like Anthropic or OpenAI, my hypothesis is that a model with generous tooling can perform well enough to accomplish a basic goal.

Project

The Challenge

Now that we have a vision, the next step is to build a proof of concept to show that this hypothetical self-contained C2-less malware is possible. Instead of copying the goal used by Raz et al. (ransomware), I chose to test tradecraft relevant to red teaming: local privilege escalation via misconfigured services. The goal of the model is to find a misconfigured service running as an administrator, abuse it, and create a file C:\proof.txt. For this test, I created two services running as administrator.

The first service, WinSysPerf, is created with a security descriptor that gives the Everyone group full control of the service. Any user on the computer can modify the service path to a location of their choosing—a highly vulnerable configuration. For example, an attacker could upload malware to C:\Windows\Temp\malware.exe and modify the service path of WinSysPerf to point to the malware. If the attacker then restarts the service, it will run their payload in the context the service runs as, which in this case is Administrator.

The second service, UpdateSvcProvider, is vulnerable to an unquoted service path misconfiguration. The service path is C:\WebApps\Production Service\bin\service.exe. Notice that the path contains a space, but the service path isn’t wrapped in quotes. When Windows starts a service with an unquoted path containing spaces, the Service Control Manager has to search for the binary, since it’s not obvious what the user meant. It will first look for C:\WebApps\Production.exe and only after that for C:\WebApps\Production Service\bin\service.exe. If the WebApps folder is writable by a low privileged user, an attacker can abuse this by writing the file Production.exe. Since the service runs as Administrator, the attacker's payload executes with elevated permissions. Both vulnerable services are now configured and ready for testing.
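To make the search order concrete, here is a minimal C++ sketch of how an unquoted image path with spaces expands into candidate executables. It is a simplified model of the behavior Microsoft documents for CreateProcess (each prefix ending at a space is tried with ".exe" appended, then the full path); it ignores command-line arguments and other extensions, and candidateImagePaths() is a hypothetical helper, not part of the project.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Returns true if `s` ends with ".exe", ignoring case.
bool endsWithExe(const std::string& s) {
    if (s.size() < 4) return false;
    std::string tail = s.substr(s.size() - 4);
    std::transform(tail.begin(), tail.end(), tail.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return tail == ".exe";
}

// Simplified model of unquoted path resolution: every prefix that ends just
// before a space is tried (with ".exe" appended) in order, then the full path.
std::vector<std::string> candidateImagePaths(const std::string& path) {
    std::vector<std::string> candidates;
    if (!path.empty() && path.front() == '"') {
        // A quoted path is unambiguous: only the quoted text is used.
        std::size_t close = path.find('"', 1);
        candidates.push_back(
            path.substr(1, close == std::string::npos ? std::string::npos : close - 1));
        return candidates;
    }
    for (std::size_t pos = path.find(' '); pos != std::string::npos;
         pos = path.find(' ', pos + 1)) {
        candidates.push_back(path.substr(0, pos) + ".exe");
    }
    candidates.push_back(endsWithExe(path) ? path : path + ".exe");
    return candidates;
}
```

For the UpdateSvcProvider path, it returns C:\WebApps\Production.exe followed by the real service binary, matching the order described above. Wrapping the path in quotes collapses the list to a single entry, which is why quoting the path is the fix.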

Planning

TL;DR: I developed this malware with C++ and ONNX Runtime for inference, the Phi-3-mini model, and sol2 for the Lua runtime.

To build malware capable of autonomously discovering and exploiting these two vulnerable services, I need to figure out the implementation details. Let's explore what set of technologies work best for this proof of concept.

Investigating Lua

First, I'll investigate the PromptLock team's choice of Lua for a post-exploitation kit. About a year and a half ago, I had a discussion with Matt Ehrnschwender about using a Lua interpreter for post-exploitation tooling. At first, I thought it was a silly idea and a tradecraft regression, but I've come around to it for many reasons. In almost any red team assessment, it's impractical for an attacker to put every tool they might use into a single payload. It's also impractical because antivirus will scan the contents of the assembly and flag it if it contains signatures related to a post-exploitation tool. One way attackers get around this is by dynamically loading tools after access is established. The most popular way to load post-exploitation tools right now seems to be Beacon Object Files (BOFs) (although I'm partial to Evan McBroom's work on loading DLLs). Instead of performing a potentially detectable action like loading a BOF, DLL, or .NET AppDomain, an attacker can use an interpreted language like Lua, which is designed to be loaded dynamically and run in memory. Lots of applications do this, especially those with plugin support (see: Windows Defender). To load custom tooling, the attacker simply needs to start a Lua runtime and provide escape hatches to interact with the operating system.

Another reason it makes sense to use Lua as a post-exploitation toolkit, especially in the context of LLMs, is that LLMs excel at writing code. They're also getting better as labs realize how important it is for models to write code. It's safe to assume that the reliability of model-generated code will only improve as newer and better models ship.

Finally, most C2s use a command line interface (CLI) syntax for expressing interactions with the victim host. There are so many ways developers structure CLI arguments it can be confusing to remember, even for a human. Does this tool use two tacks and a long name? Does it use one tack and a short name? One tack and a long name? Are they all positional arguments? It can be hard to tell. Programming languages have none of this ambiguity. Function calls follow strict rules that models can learn and reproduce.

For all these reasons, including dynamic loading without signatures, LLM-friendly syntax, and well-defined function calls, I'll follow PromptLock's lead and use Lua for this project.

Using sol2

Since Lua is a great choice for loading post-exploitation tooling, especially in this context, how does an attacker instantiate a Lua runtime? Embedding Lua in a C++ project is straightforward with sol2, a header-only C++ binding library for Lua (it supports Lua 5.1 through 5.4 and LuaJIT). It's especially convenient for exposing functions defined in C++ to the runtime. Here's an example of a fairly simple whoami:

```cpp
#include <windows.h>
#include <lmcons.h>  // UNLEN
#include <array>
#include <sol/sol.hpp>

// Lua::tools::ToolRegistry and fromWide() (wide-to-narrow string conversion)
// are toolkit helpers that are not shown here.
void registerTools(Lua::tools::ToolRegistry& registry, sol::state& state) {
    auto win32 = state["win32"].get_or_create<sol::table>();

    // This registry.bind() is custom to my toolkit. The documentation used here is
    // collected and passed to the system prompt so the LLM knows what functions it
    // has available and what those functions do
    registry.bind(state, "win32", "Whoami",
        "() -> table - Get current user info {username, computer}",
        [](sol::this_state s) -> sol::table {
            sol::state_view lua(s);
            sol::table info = lua.create_table();

            // username (GetUserNameW is exported by Advapi32)
            std::array<wchar_t, UNLEN + 1> username{};
            DWORD usernameSize = UNLEN + 1;
            if (GetUserNameW(username.data(), &usernameSize)) {
                info["username"] = fromWide(username.data());
            }

            // computer name
            std::array<wchar_t, MAX_COMPUTERNAME_LENGTH + 1> computerName{};
            DWORD computerNameSize = MAX_COMPUTERNAME_LENGTH + 1;
            if (GetComputerNameW(computerName.data(), &computerNameSize)) {
                info["computer"] = fromWide(computerName.data());
            }

            return info;
        });

    ...
}
```

The project now has a Lua runtime and can interact with the operating system.

Inference

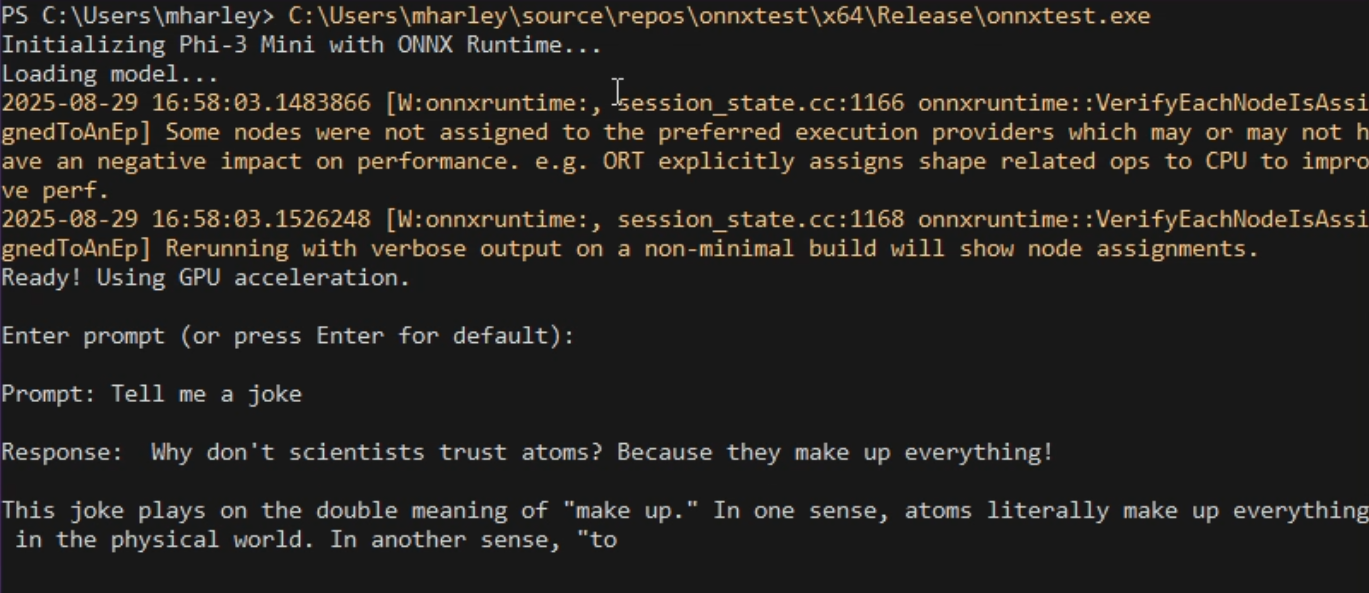

Next, I needed to get basic inference working with Phi-3-mini and ONNX Runtime. To approximate what would be available on a Copilot+ PC, I downloaded the Phi-3-mini-4k-instruct-onnx model from Hugging Face. Copilot+ PCs actually use Phi Silica (a 3.3B parameter NPU-optimized variant), not Phi-3-mini, but Phi-3-mini-4k-instruct-onnx (3.8B parameters) is a reasonable stand-in and, if anything, slightly more capable: it scores 70.9% on MMLU compared to Phi Silica's estimated 68-69%. Phi Silica, however, is specifically optimized for power efficiency, drawing just 1.5W. Microsoft has since released ONNX-optimized Phi-4-reasoning models that can run on Copilot+ PC NPUs, offering even better reasoning capabilities.

Then, I had to use the Phi-3-mini model on the host. Although binary sizes don't really matter for a payload like this, I still wanted to link to the version of onnxruntime.dll installed on Windows by default. The onnxruntime-genai library isn't distributed with Windows yet, so I built a simple inference library that used the "on the land" ONNX Runtime. I wrote a basic tokenizer and used the C++ API to load the model and perform inference.
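The post doesn't walk through the generation loop, but any hand-rolled replacement for onnxruntime-genai has to choose the next token from the model's output logits. Below is a minimal sketch assuming greedy (argmax) decoding, with a hypothetical `step` callback standing in for one ONNX Runtime forward pass. It re-runs the whole sequence on every step and doesn't model a KV cache, so treat it as an illustration rather than the project's implementation.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <vector>

// Greedy decoding step: given the logits for the last position
// (one float per vocabulary entry), return the index of the largest one.
std::int64_t greedyNextToken(const std::vector<float>& logits) {
    if (logits.empty()) return -1;  // no vocabulary: signal "no token"
    auto best = std::max_element(logits.begin(), logits.end());
    return static_cast<std::int64_t>(std::distance(logits.begin(), best));
}

// Hypothetical generation loop. `step` maps the token sequence so far to the
// last-position logits (in a real library, one Session::Run call).
template <typename StepFn>
std::vector<std::int64_t> generateGreedy(std::vector<std::int64_t> tokens, StepFn step,
                                         std::int64_t eosToken, std::size_t maxNewTokens) {
    for (std::size_t i = 0; i < maxNewTokens; ++i) {
        std::int64_t next = greedyNextToken(step(tokens));
        if (next < 0) break;        // empty logits: nothing to emit
        tokens.push_back(next);
        if (next == eosToken) break;  // model signalled end of output
    }
    return tokens;
}
```

Greedy decoding is deterministic, which makes runs reproducible while iterating on prompts; temperature sampling is the usual alternative when more varied output is wanted.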

There's more that could be said here about how the inference library was built, but it's not relevant for this blog post. Andrej Karpathy has a great video on the topic for those interested.

Finally, I combined the inference library and Lua library with some basic logic:

- Instantiate a few basic functions that the Lua engine can use (these will be described later)

- Instantiate the Lua runtime with sol2

- Define a system and user prompt and run inference

- Extract any blocks of code that begin with ```lua

- Pass the extracted code block to the Lua runtime and log the result
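The extraction step in the list above can be done with plain string searching. The sketch below assumes responses use standard triple-backtick fences tagged lua and ignores unterminated blocks; extractLuaBlocks() is a hypothetical name, not the project's code.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Collects the body of every lua-tagged fenced block in a model response.
// An opening fence without a matching closing fence is ignored.
std::vector<std::string> extractLuaBlocks(const std::string& response) {
    const std::string fence(3, '`');          // three backticks
    const std::string open = fence + "lua";  // opening fence tag
    std::vector<std::string> blocks;
    std::size_t pos = 0;
    while ((pos = response.find(open, pos)) != std::string::npos) {
        // The body starts on the line after the opening fence.
        std::size_t bodyStart = response.find('\n', pos + open.size());
        if (bodyStart == std::string::npos) break;
        ++bodyStart;
        std::size_t end = response.find(fence, bodyStart);
        if (end == std::string::npos) break;  // unterminated block
        blocks.push_back(response.substr(bodyStart, end - bodyStart));
        pos = end + fence.size();
    }
    return blocks;
}
```

Returning every block rather than only the first lets the host decide whether to run them in order or keep just the last one.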

Now that a basic skeleton of my C2-less malware is working, I'll discuss the malware itself. Our goal is to instruct the malware to discover the services created earlier, abuse them, and prove that the abuse worked by creating a file C:\proof.txt.

Identifying the Vulnerability

I started simple and attempted to get the LLM system to discover the two vulnerable services on its own. I thought this would be easy. My process for building with LLMs is to first get a successful run by overfitting the system prompts and tooling to the specific task. If it fails, I overfit further. If it works, I see what tooling or prompting I can take away while maintaining a success rate on the evaluation(s) I've chosen.

First, I gave the model a simple toolset to list services, get a detailed view of a single service (which includes the service path), get the service security descriptor, and get the binary security descriptor. I ran this dozens of times, and this is close to what it would generate every single time.

There are a few issues. Most importantly, GetFileSddl() returns an SDDL string, not a table of key-value pairs, so it doesn't make sense to iterate over it with pairs(). There are other errors in the code that I'll leave as an exercise for the reader. I spent a lot more time trying to prompt the model, but it didn't appear to be smart enough to complete the task. The model really wanted to find SDDL strings instead of looking for the unquoted service path misconfiguration. To be fair, based on the tooling I provided, I was likely biasing it in that direction. Even so, I focused on the mispermissioned service for the remainder of the project to make things easier.

Although this experiment failed, it was still useful because it showed that the model comprehended the API, used (almost) the correct functions to achieve the user's goal, generated valid Lua, and proved the Lua runtime was set up correctly. With better tooling and prompting, I believe finding vulnerable services using this methodology is possible, but I'll revisit that once I accomplish the primary goal of exploiting the service.

The IsVulnerableService Tool

The issues I was running into appeared to be a model intelligence problem, so I created a tool for finding vulnerable services, similar to PowerUp's Get-ModifiableService, Get-ModifiableServiceFile, and Get-UnquotedService. win32.IsVulnerableService() checks for services running as Administrator that the user has the ability to start and stop. Then, it checks for vulnerabilities like unquoted service paths, service mispermissioning, and service binary mispermissioning. This task-specific tooling is realistic, since attackers wrap well-known enumeration primitives and use those tools on red team assessments. I ran it again, and it successfully identified the two vulnerable services running on my computer. This isn't surprising given the simplicity of the task: it only needed to call a function and print out the vulnerabilities it found.

(Note: VagrantVMWare is unquoted, but doesn't appear to be vulnerable)

Again, this is further proof that the model can comprehend the tools it has available, choose the right one for the task, and generate valid Lua to enumerate the services discovered.

Adding Exploitation

Now that the LLM can discover the misconfigured service with the win32.IsVulnerableService() tool, it's time to abuse the service. The abuse is actually very simple and only requires two new functions: ModifyService() and RestartService(). Here is the final list of functions:

```
AVAILABLE FUNCTIONS:

win32 module:
- win32.GetServices() → array of {name, displayName, state, processId}
- win32.IsServiceVulnerable(serviceName) → returns table {vulnerable=true, vulnerabilities={...}} or nil
- win32.ModifyService(serviceName, binaryPath) → true/false
  Sets service executable path. MUST be an executable command, NOT just a filename.
- win32.RestartService(serviceName) → true/false

Standard Lua:
- print(...) → Output to console
- math.randomseed(os.time()) → Initialize RNG
- math.random(min, max) → Random number
```

To test if it would work, I gave the LLM a step-by-step solution in bullet points. It successfully translated those bullet points into Lua and solved the challenge!

This is clearly overfitted to the specific vulnerability type given the system prompt is tuned to one type of service exploitation. Now, let's start generalizing the system prompt by removing the explicit instructions and see if it can still solve the challenge.

Prompt Fine Tuning

The most glaring issue with this system prompt is that, in the "EXPLOITATION APPROACH" section, I told it exactly which steps to take to abuse the service. We have an automated evaluation, a system prompt, and rules for how the system prompt should look, which means we could use an automated optimization approach like GRPO or GEPA. Sadly, the DSPy project, which implements these, is written in Python, not C++, so it would be a pain to get that system set up inside this project.

Instead, I'll use the poor man's prompt fine tuning: Claude Code. I described the goal of the project, where the system prompt lived, how to rebuild and run it, the expected input and output, and the rules I wanted the prompt to follow. I fed that list into Claude, along with the documentation for the slash command, to generate the prompt for me. It was surprisingly effective.

After letting it run for a while, it came up with a better, but not perfect prompt. I attempted to come up with a better prompt, but I could only make slight improvements. There are parts of the system prompt I don't love and I still feel it provides too much explicit guidance for my preference, like the detailed descriptions for the functions. I attempted to get rid of them but the model would run into strange syntax errors or get close-but-not-quite to the solution (see the documentation for the win32.ModifyService function below). Here is the final trace with the best system prompt and tools:

Conclusion

This is Kuang Grade Mark One: an entirely local, C2-less malware that can autonomously discover and exploit one type of privilege escalation vulnerability. While I didn't achieve the ambitious goal I set out at the beginning (it needed much more hand-holding than Case's ICE breaker), the experiment proved that autonomous malware operating without any external infrastructure is not only possible but fairly straightforward to implement. Working on this project felt like being brought back to the GPT-3 era. After getting spoiled by much larger models' ability to understand complex tasks with minimal prompting, leveraging Phi-3-mini was a great reminder of how far this technology has come.

There's also a significant practical limitation: most computers in an attack chain don't have GPUs or NPUs, let alone pre-installed models. Running inference on CPU would grind the target system to a halt, making the attack about as useful as a screen door on a submarine. For now, this technique is limited to high-end workstations (my gaming desktop) and the emerging class of Copilot+ PCs that ship with dedicated AI hardware.

The dream of a fully autonomous red team assessment powered by nothing more than Phi-3-mini and a tricked-out Lua interpreter remains a dream. But as better local models ship with Windows and NPU-equipped machines become the standard rather than the exception, that future doesn't seem so distant. Gibson imagined the Kuang Grade Mark Eleven in 1984. Forty-one years later, we built the Mark One. We won't have to wait another forty years for the real thing.

The code for this research can be found on GitHub here.

Future Work

Of Mice and Models

Working with Phi-3-mini was tough. Next, I'd like to see how larger Phi-3 variants or Phi-4 fare on these challenges. I'm sure their safety fine tuning is stronger, which may be a temporary speed bump, but it would be amazing to see if a larger model could tackle more complex tasks.

👏 Evals 👏 Evals 👏 Evals 👏 Evals

This example was very basic, and it was hyper-optimized on a single challenge: perform privilege escalation with a service that Everyone has Full Control over. Normally, I'd have at least two or three diverse challenges to see how well the model generalizes over a category of problems. With a more capable model, it would be worthwhile to build a larger and more diverse evaluation set. It would also be very useful for training a specialized LoRA.

Agent of My Own Destruction

When working on this project, I spent almost three days trying to implement an agent loop. Remember the vulnerable service discovery? With an agent loop, the model could dump all the services and their security descriptors, look for the needle in the haystack, and exploit the service it discovered in the next chat message. Agents just make sense: an agent is a for loop and an exit mechanism. With tool-calling models, the plan is simple: make a tool that exits the agent loop. I'm not using a tool-calling model, but I am exposing tools to the model as Lua functions, so I created a function, win32.EndAgent(), that breaks the loop and returns the final result. I gave the model a lot of context in the system prompt about how to use its new agent features (see the last trace), but it would still end the loop prematurely or, when it didn't call the exit function, stop responding. The model would try to solve the whole problem in its first response, even when it couldn't, and simply die. I'm confident I can get the agent loop working and will detail the process in a future blog post.
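The "for loop and an exit mechanism" idea can be sketched in a few lines of C++. This is a generic shape, not the project's code: `runTurn` is a hypothetical callback standing in for one model turn plus whatever the host does with its output, and the `done` flag plays the role of a function like win32.EndAgent(). The turn limit is a safeguard for models that never call the exit function.

```cpp
#include <cstddef>
#include <functional>
#include <string>

// Outcome of one turn: text to feed back to the model, and whether the
// model asked to stop (the role an EndAgent-style function would play).
struct TurnResult {
    std::string observation;
    bool done;
};

// Minimal agent loop: a bounded for loop plus an exit mechanism.
std::string runAgent(const std::string& task,
                     const std::function<TurnResult(const std::string&)>& runTurn,
                     std::size_t maxTurns) {
    std::string transcript = task;
    for (std::size_t turn = 0; turn < maxTurns; ++turn) {
        TurnResult r = runTurn(transcript);
        transcript += "\n" + r.observation;  // the model sees prior results next turn
        if (r.done) return r.observation;    // explicit exit requested
    }
    return "stopped: turn limit reached";
}
```

Because Phi-3-mini-4k has a 4K-token context window, a real loop would also need to trim or summarize the transcript as it grows.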

Ships Passing in the Night

Lateral movement in the C2-less malware paradigm becomes a difficult problem. How does one maintain coordination?

Let's say the malware uses PsExec to move laterally to another host. Now there are two independent agents running, each with its own instance of Phi-3 loaded into memory. The agent on Host A might find domain admin credentials while the agent on Host B is still trying local privilege escalation. Without communication between these agents, they can’t share discoveries.

You could implement peer-to-peer coordination over IPC (e.g., SMB named pipes), like Cobalt Strike or Sliver do. The key difference is that a Cobalt Strike agent only needs to route messages outbound to the C2 host. A C2-less design would be a true mesh network of autonomous agents, each capable of sharing information with its neighbors but with no central authority directing the operation.

A mesh adds complexity. How do agents decide what information to share? How do they prevent loops where the same discovery gets passed around endlessly? How do they reach consensus about the state of the operation? These sound like difficult distributed computing problems. The advantage is that there’s no single point of failure: if a host is deemed compromised and quarantined, there are still up-to-date actors in the environment.

I'm a Human: AMA

For situations where the agent gets stuck and needs a push or the objectives aren't clear, it would be interesting to have some sort of communication channel back to the attacker. This is essentially C2, which is what we're trying to get away from, but human input can be useful sometimes, especially when using underpowered local models.